As part of our work for EUROfusion's Horizon 2020 public engagement remit, we created a user-controlled simulation of MASCOT, the remote handling robot used to maintain the Joint European Torus (JET) fusion reactor in southern England.

About MASCOT

MASCOT is a bespoke system for remote maintenance of the JET reactor where "two snake-like booms reach into the reactor to deliver the remote operator’s hands and eyes. The ‘master-slave’ manipulator allows the operator to feel every action and over the years JET’s RHS operators have become highly skilled at the most dextrous tasks."

Designing the Simulation

Whilst manipulating MASCOT is highly complex and operators need many hours of training, our simulation had to give exhibition visitors an insight into those complexities with minimal onboarding. To do this, we combined a full-screen third-person view of the robot inside the reactor's vacuum vessel with overlays from three of MASCOT's many on-body cameras. This conveyed a sense of the multiscreen view that remote operators work with during operation.

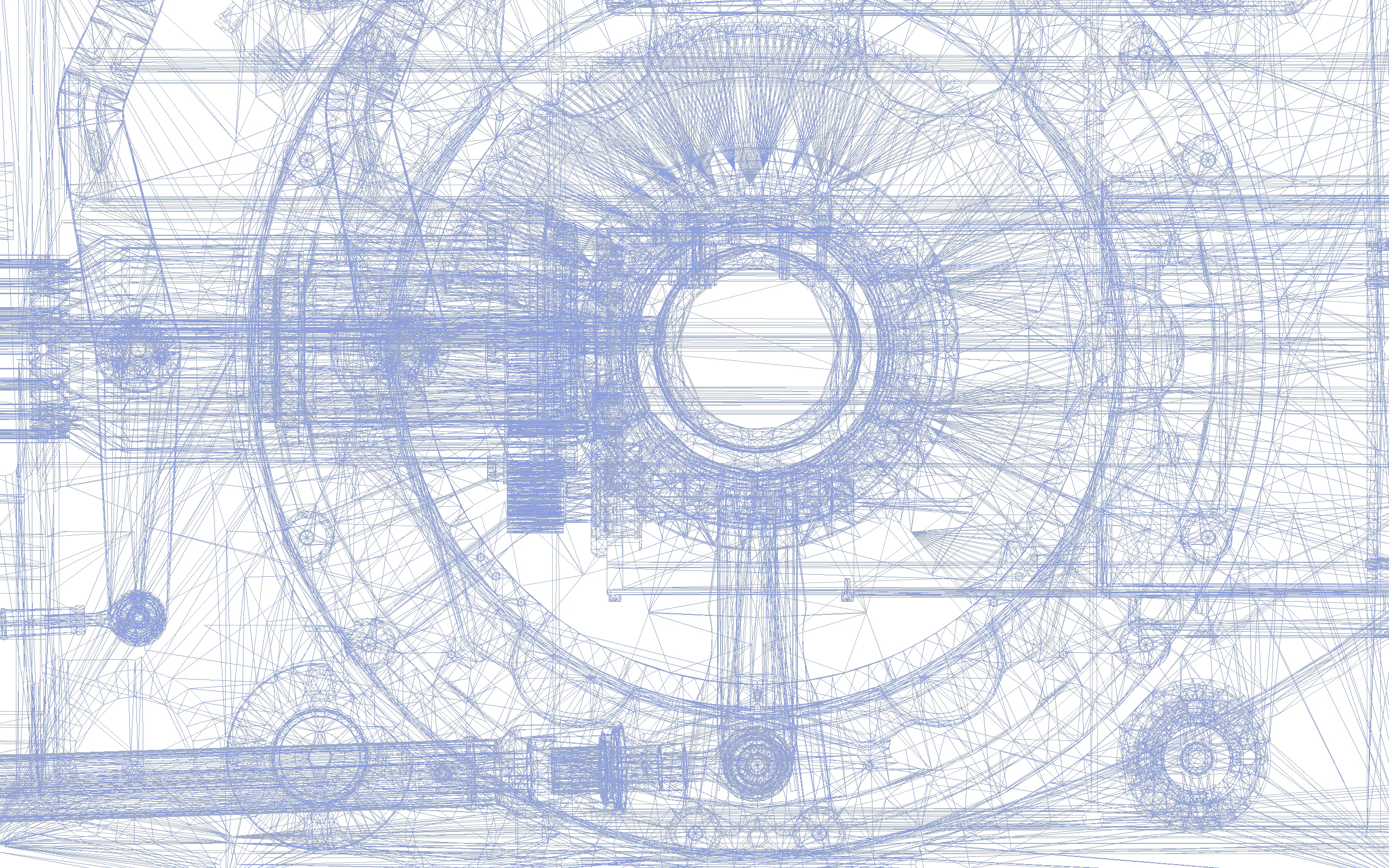

The Remote Handling Control Room (RHCR) at the United Kingdom Atomic Energy Authority (UKAEA)

Wireframe view of the main body of the MASCOT 3D model

Selecting and Optimising Models

We were fortunate that the team at RACE (Remote Applications in Challenging Environments) already had highly detailed models of both JET and MASCOT, and we received over 5GB of 3D files. We had selected Unity to run the simulation, and a great deal of polygon reduction was required to optimise the models for playback on a PC with state-of-the-art graphics hardware. We switched to a closer view of the reactor, which let us omit unseen parts of the JET model and remove MASCOT's boom. This gave the simulator an intimate feel, and we were ready to move on to the interaction design.

Post-touch Interaction

Given post-pandemic health and safety considerations for a public exhibit, we implemented hands-free manipulation of the robot, using Ultraleap sensors for hand tracking.

The experience provided a guided process that taught users to control the grippers, arms, and main body with gestures, and to use the three additional views from the cameras mounted on these parts.

Users were then able to rotate the main body, extend the arms and grip objects in order to perform the main task: replacing a tile on the divertor.
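As an illustration only, one common way to turn hand-tracking data into a grip command is pinch detection with hysteresis: the gripper closes when the thumb and index fingertips come close together and reopens only once they move clearly apart, so small tracking jitter does not make it flicker. The sketch below is a hypothetical Python example working on plain 3D fingertip coordinates; it does not use the Ultraleap SDK, and the function name and millimetre thresholds are assumptions rather than the values used in the exhibit.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds in millimetres; real values would need tuning in user testing.
PINCH_CLOSE_MM = 25.0  # fingertips closer than this -> close the gripper
PINCH_OPEN_MM = 40.0   # fingertips further apart than this -> open it again

Vec3 = tuple[float, float, float]


@dataclass
class GripperState:
    closed: bool = False


def update_gripper(state: GripperState, thumb_tip: Vec3, index_tip: Vec3) -> bool:
    """Hypothetical per-frame update: close on a pinch, reopen only past a wider gap."""
    gap = math.dist(thumb_tip, index_tip)
    if not state.closed and gap < PINCH_CLOSE_MM:
        state.closed = True
    elif state.closed and gap > PINCH_OPEN_MM:
        state.closed = False
    # Gaps between the two thresholds leave the state unchanged (hysteresis).
    return state.closed


if __name__ == "__main__":
    state = GripperState()
    frames = [((0, 0, 0), (50, 0, 0)), ((0, 0, 0), (20, 0, 0)),
              ((0, 0, 0), (30, 0, 0)), ((0, 0, 0), (45, 0, 0))]
    print([update_gripper(state, t, i) for t, i in frames])  # [False, True, True, False]
```

The gap between the two thresholds is the design choice here: a 30 mm gap keeps an already-closed gripper closed, which is more forgiving for first-time users than a single cut-off.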

Reducing Complexity, Increasing Engagement

Following early user testing, we reduced both the number of actions users could perform and the number of steps in the task. We were concerned that this far shorter experience, around 60 seconds long, would be less engaging for end users. However, during testing we noticed that users would play multiple times, refining their gestures as they repeated the process.

The behaviours seen during testing were borne out when the exhibit was displayed in public. Far from making for a banal experience, the reduced level of interaction and the simpler task lowered the barrier to entry, and the simulator proved very popular.